Playground

The Playground tab provides a real-time environment to test and interact with your AI assistant. This feature allows you to evaluate the assistant’s responses to queries and its behavior when performing actions, helping you refine its performance and identify areas for improvement.

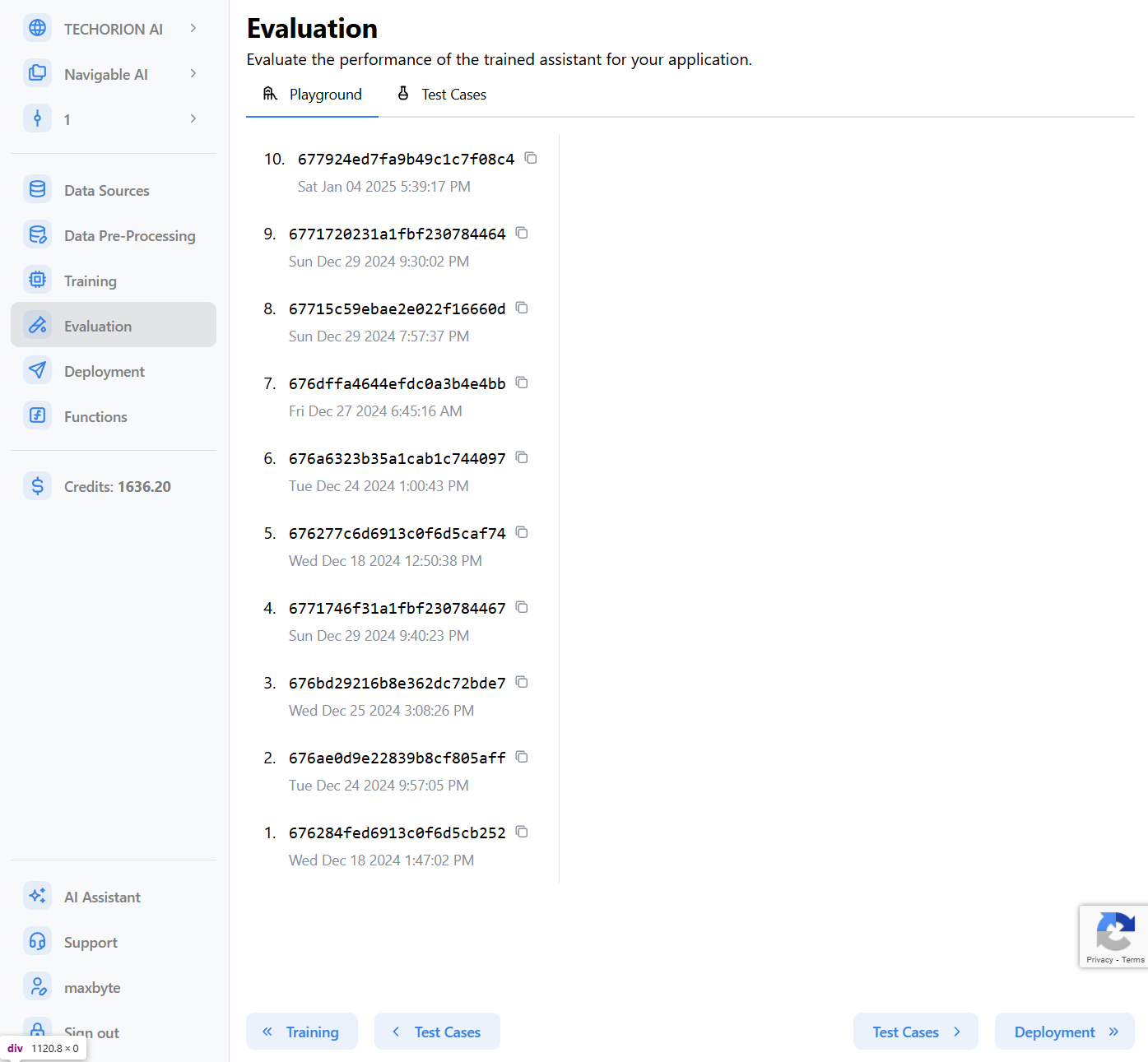

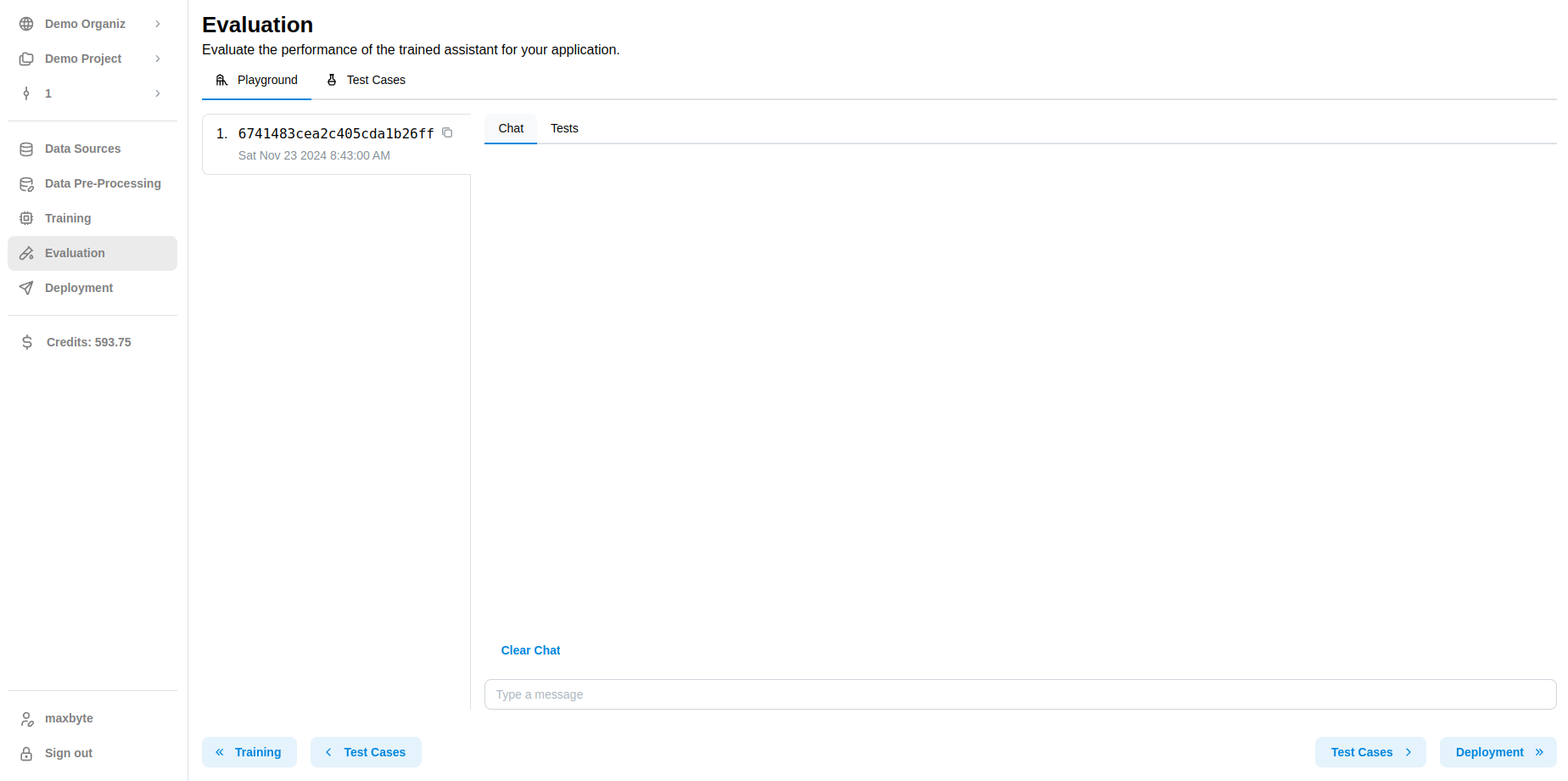

The left side of the Playground tab lists your fine-tuned models, each with its ID and training completion date and time. You can switch between models to test different assistants.

Using the Playground

-

Select a model from the list.

-

Navigate to the Chat tab.

-

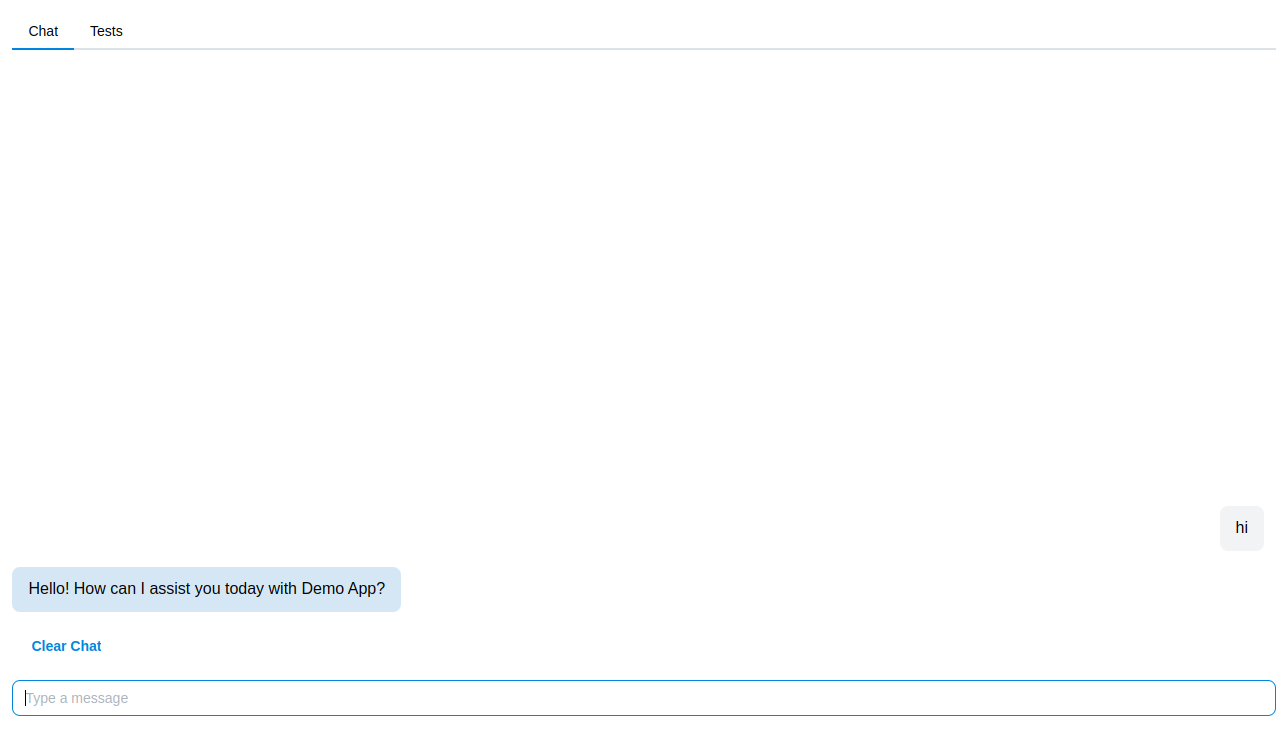

Type a message and hit the Enter key. Alternatively, you can click the Send button.

The assistant will respond to your message.

If you have configured any actions, they will show up along with the message as CTA (Call To Action) buttons.

You can clear the chat anytime by clicking the Clear Chat button.

Playground Options

- Suggest Actions: If you have trained the assistant with pages or actions, you can see suggested actions in the chat. This can be toggled on or off.

- System Prompt: You can select a custom system prompt that you have created from the System Prompts section.

Best Practices

- Start Simple: Test with basic queries first to confirm foundational understanding.

- Explore Edge Cases: Challenge the assistant with ambiguous or complex scenarios to evaluate its robustness.

- Iterate and Improve: Use the Playground to identify gaps and continuously improve the assistant’s capabilities.
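One way to apply these practices is to keep a small written test plan next to the Playground and record what you observe for each prompt. The sketch below is only an illustration: `PlaygroundCheck`, `ordered_plan`, `record`, and `gaps` are hypothetical helper names, the prompts are made-up examples, and nothing here calls the product itself. You still type each prompt into the Chat tab by hand and note the outcome.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PlaygroundCheck:
    """One prompt to try by hand in the Playground (hypothetical structure)."""
    prompt: str
    tier: str                      # "basic" or "edge"
    expectation: str               # what a good reply should contain or do
    passed: Optional[bool] = None  # None until the prompt has been tried
    notes: str = ""


def ordered_plan(checks: List[PlaygroundCheck]) -> List[PlaygroundCheck]:
    """Start simple: basic prompts first, edge cases afterwards."""
    tier_rank = {"basic": 0, "edge": 1}
    # sorted() is stable, so the order within each tier is preserved.
    return sorted(checks, key=lambda c: tier_rank[c.tier])


def record(check: PlaygroundCheck, passed: bool, notes: str = "") -> None:
    """Store the outcome observed in the Playground for one prompt."""
    check.passed = passed
    check.notes = notes


def gaps(checks: List[PlaygroundCheck]) -> List[PlaygroundCheck]:
    """Checks that were tried and did not meet the expectation."""
    return [c for c in checks if c.passed is False]


# Made-up example prompts; replace them with ones that fit your assistant.
checks = [
    PlaygroundCheck("Cancel it. Actually no, change the date instead.",
                    "edge", "Asks which request is meant"),
    PlaygroundCheck("What are your opening hours?",
                    "basic", "States the hours from the training data"),
    PlaygroundCheck("How do I reset my password?",
                    "basic", "Explains the reset steps"),
]

plan = ordered_plan(checks)

# Outcomes entered by hand after trying each prompt in the Chat tab.
record(plan[0], passed=True)
record(plan[2], passed=False, notes="Suggested an unrelated action")

open_gaps = gaps(plan)
for c in open_gaps:
    print(f"[{c.tier}] {c.prompt!r}: {c.notes}")
```

The list returned by `gaps` is the set of prompts to revisit after you adjust training data or actions, which supports the iterate-and-improve loop described above.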

What’s Next?

After testing in the Playground, use the insights to refine your assistant’s training data or actions. Once satisfied with its performance, proceed to deploy the assistant or continue fine-tuning it using test cases and training workflows.