Test Cases

The Test Cases section allows you to manage and evaluate your AI assistant’s responses systematically. By running test cases, you can ensure the assistant is providing accurate, relevant, and high-quality answers to user queries. This section also provides detailed insights into the assistant’s performance across various metrics.

What are Test Cases?

Test cases consist of a message (query or input) and an expected response. These allow you to simulate real-world scenarios and assess how well the assistant performs. Test cases can include:

- Training Data Test Cases: Automatically generated for all questions in the training data.

- Custom Test Cases: Manually added for critical or edge-case queries specific to your application.

What happens when a Test Case is Run?

- Message: The test case message is sent to the assistant.

- Assistant Response: The assistant generates a response, which is saved for evaluation.

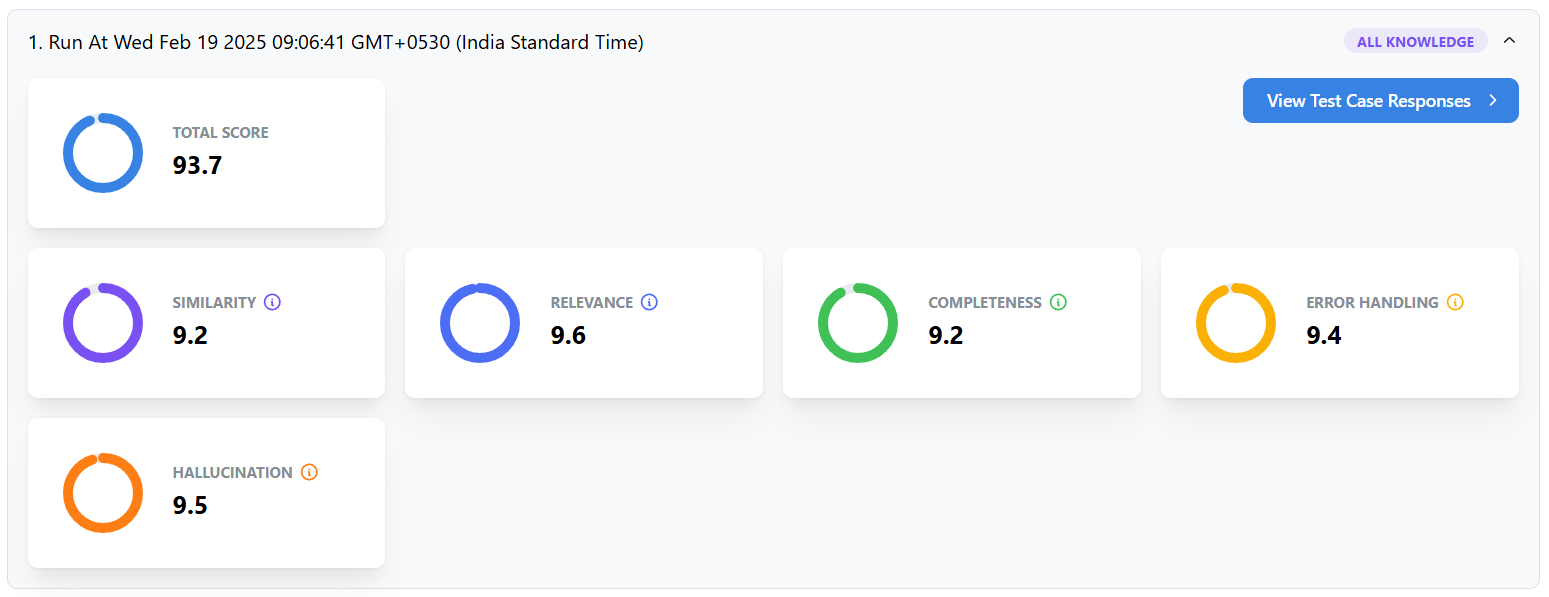

- Evaluation: A separate AI model analyzes the assistant’s response by comparing it to the expected response across several metrics:

  - Similarity: Measures how close the meaning of the actual response is to the expected response.

  - Relevance: Assesses how relevant the response is to the query.

  - Completeness: Evaluates how much of the expected response was covered.

  - Error Handling: Rates how well the assistant handles vague or incomplete questions.

  - Hallucination: Scores how well the assistant avoids generating false or irrelevant information (a higher score indicates less hallucination).
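To make the run flow concrete, here is a minimal Python sketch of a single test case run. The `TestCase` and `TestResult` types, the `run_test_case` function, the snake_case metric keys, and the `assistant` and `evaluator` callables are all hypothetical stand-ins, not the product's API; in the usage lines, stub lambdas return canned values in place of the real assistant and evaluation model.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical snake_case keys for the five metrics listed above.
METRICS = ("similarity", "relevance", "completeness", "error_handling", "hallucination")


@dataclass
class TestCase:
    message: str            # the query sent to the assistant
    expected_response: str  # the reference answer used for evaluation


@dataclass
class TestResult:
    test_case: TestCase
    actual_response: str      # saved for evaluation
    scores: Dict[str, float]  # one score per metric


def run_test_case(
    case: TestCase,
    assistant: Callable[[str], str],
    evaluator: Callable[[str, str, str], Dict[str, float]],
) -> TestResult:
    # Message: send the test case message to the assistant.
    # Assistant Response: capture the generated response.
    actual = assistant(case.message)
    # Evaluation: a separate model scores the actual vs. the expected response.
    scores = evaluator(case.message, case.expected_response, actual)
    missing = [m for m in METRICS if m not in scores]
    if missing:
        raise ValueError(f"evaluator returned no score for: {missing}")
    return TestResult(case, actual, scores)


# Usage with stub callables standing in for the assistant and the evaluator model.
result = run_test_case(
    TestCase("What are your opening hours?", "We are open 9am to 5pm, Monday to Friday."),
    assistant=lambda message: "We're open weekdays from 9am to 5pm.",
    evaluator=lambda query, expected, actual: {m: 90.0 for m in METRICS},
)
print(result.scores["similarity"])  # 90.0
```

Keeping the evaluator as a separate callable mirrors the description above, where a different AI model from the assistant performs the scoring.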

The individual metric scores are combined to give a Total Score for each response. These scores are aggregated across all test cases to provide an Overall Test Score.

Handling Vague Questions

If a test case contains a vague or incomplete query, its score will not count toward the overall test score. Instead, the system tracks a Vague Count, showing the number of test cases flagged as vague.
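The documentation does not say how the metric scores are weighted, so the following sketch assumes an equal-weight average on a 0-100 scale for the Total Score and a plain mean of Total Scores for the Overall Test Score. The `EvaluatedCase` type and the `total_score` and `overall_test_score` functions are hypothetical; the point is to show how vague test cases are left out of the overall score while still being counted in the Vague Count.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional, Tuple


@dataclass
class EvaluatedCase:
    scores: Dict[str, float]  # per-metric scores, assumed 0-100, higher is better
    is_vague: bool = False    # True when the query was flagged as vague


def total_score(scores: Dict[str, float]) -> float:
    # Assumption: every metric carries equal weight.
    return mean(list(scores.values()))


def overall_test_score(cases: List[EvaluatedCase]) -> Tuple[Optional[float], int]:
    """Return (overall score over non-vague cases, vague count)."""
    counted = [total_score(c.scores) for c in cases if not c.is_vague]
    vague_count = sum(1 for c in cases if c.is_vague)
    # If every case is vague there is nothing to average.
    overall = mean(counted) if counted else None
    return overall, vague_count


cases = [
    EvaluatedCase({"similarity": 90.0, "relevance": 80.0}),                 # total 85.0
    EvaluatedCase({"similarity": 40.0, "relevance": 50.0}, is_vague=True),  # excluded
    EvaluatedCase({"similarity": 100.0, "relevance": 90.0}),                # total 95.0
]
print(overall_test_score(cases))  # (90.0, 1)
```

Here the vague case's low scores do not pull the overall score down; it only increments the Vague Count.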

What to do after all Test Cases have run?

After running test cases, you can review:

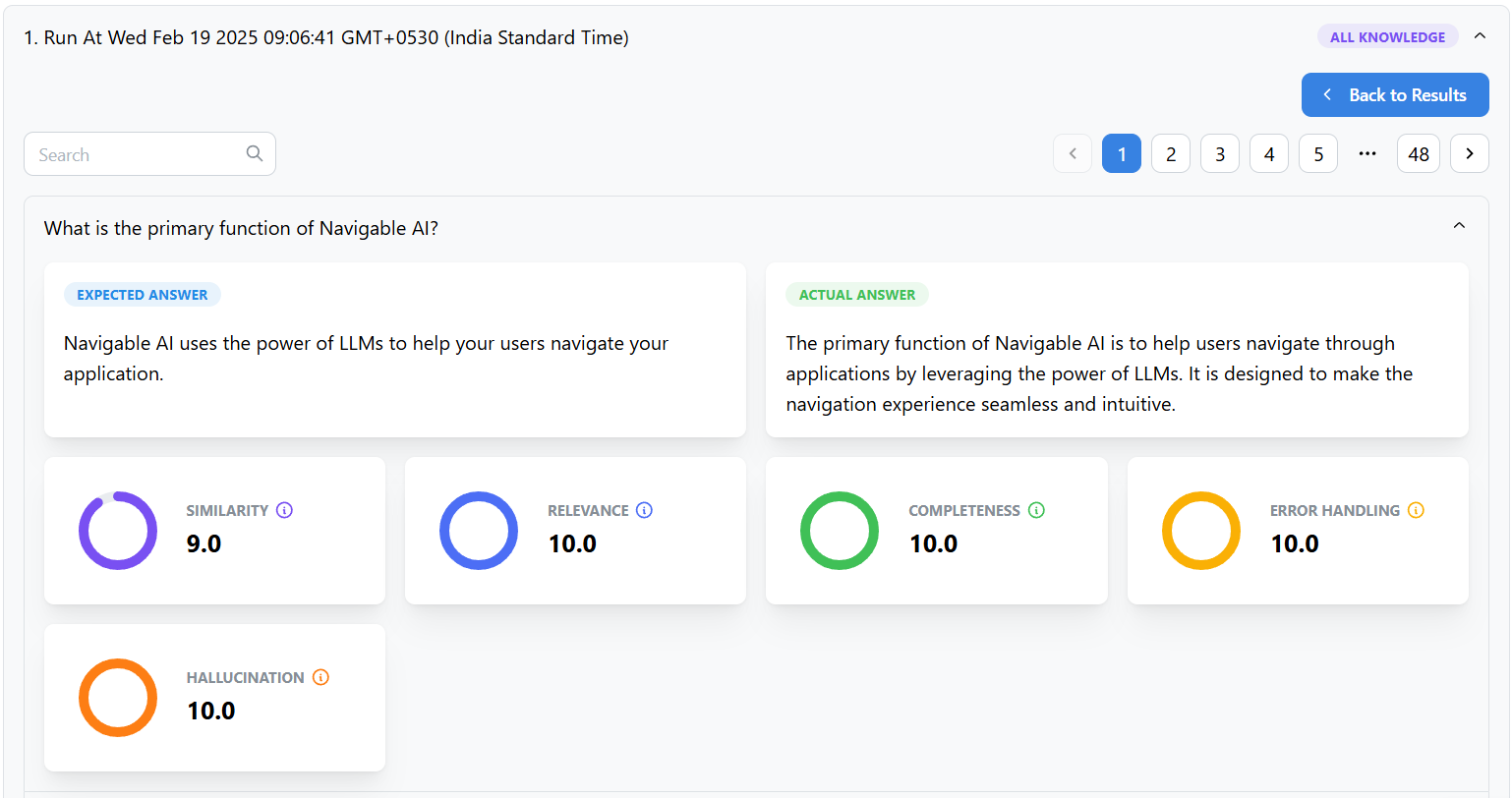

- Individual Test Case Scores: View detailed scores for each test case, including breakdowns by metric.

- Overall Test Score: Assess the assistant’s performance across all non-vague test cases.

- Vague Count: Identify and refine vague or incomplete test cases for more accurate evaluation.

Add a Test Case

-

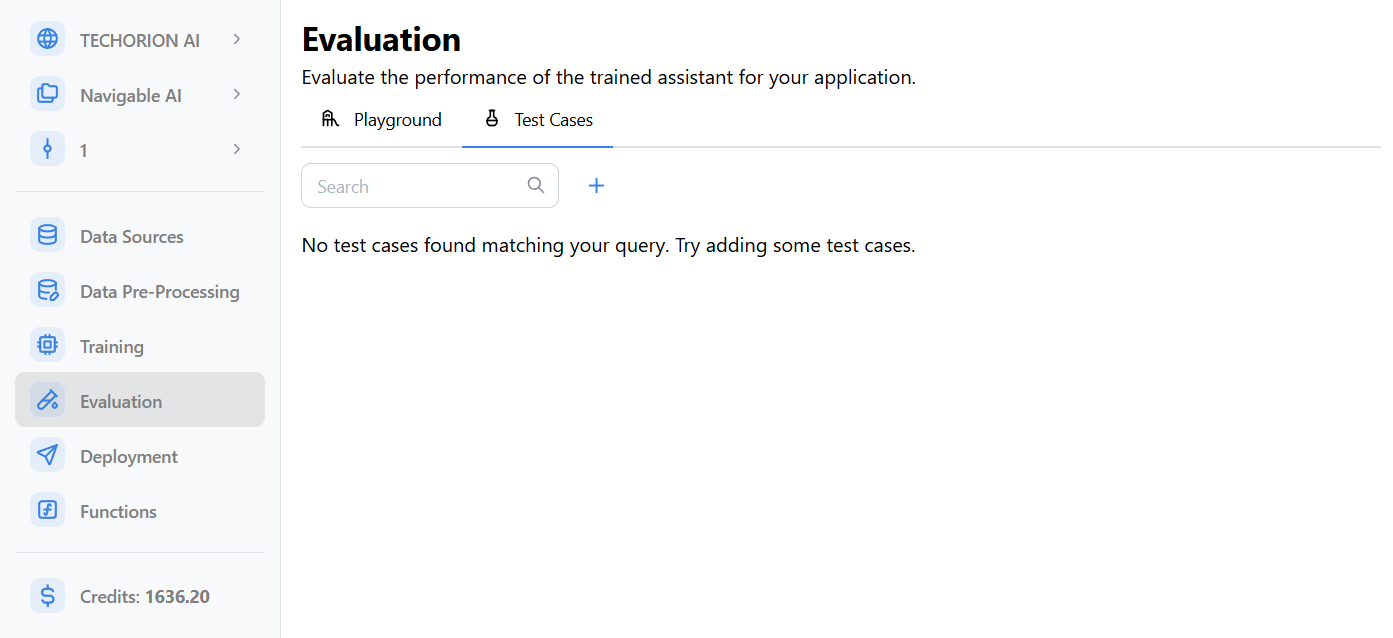

Navigate to the Test Cases tab in the Evaluation section.

-

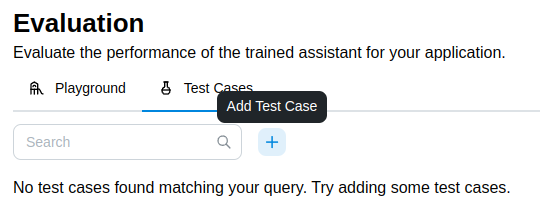

Click on the Plus icon in the top-left corner to add a new test case.

-

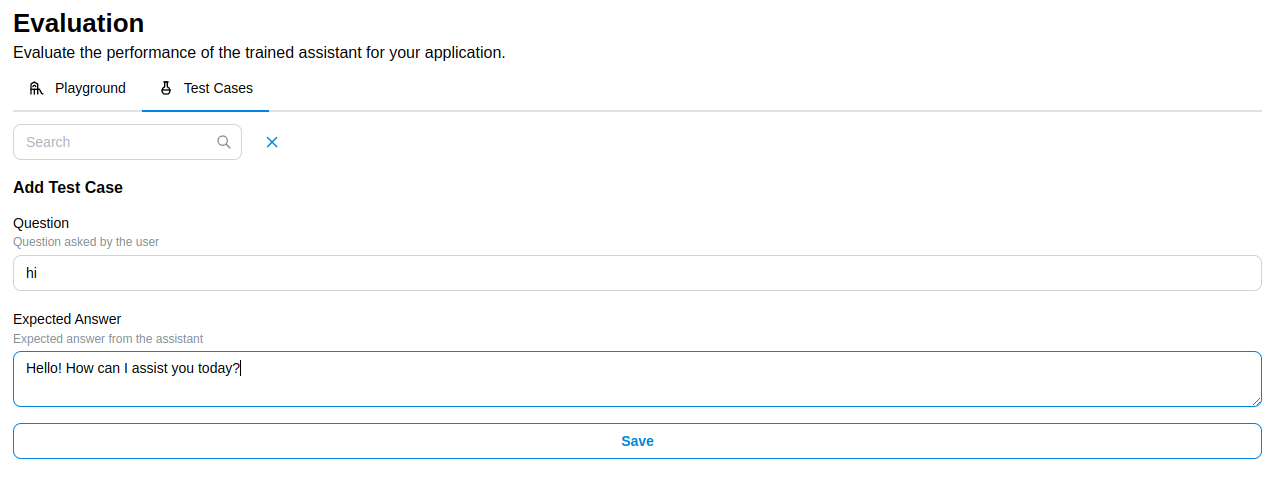

Fill in the Message and Expected Response fields.

-

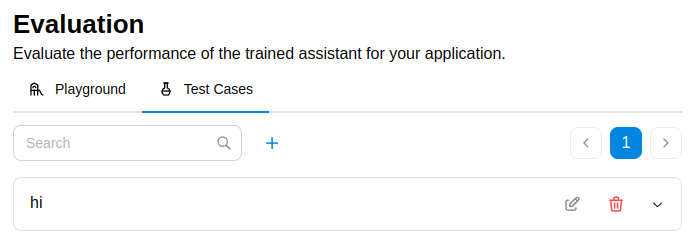

Click Save to create the test case. A success notification will confirm that the test case was successfully created and the test case will be added to the Test Cases tab.

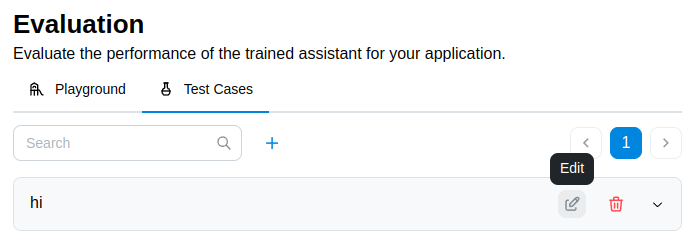

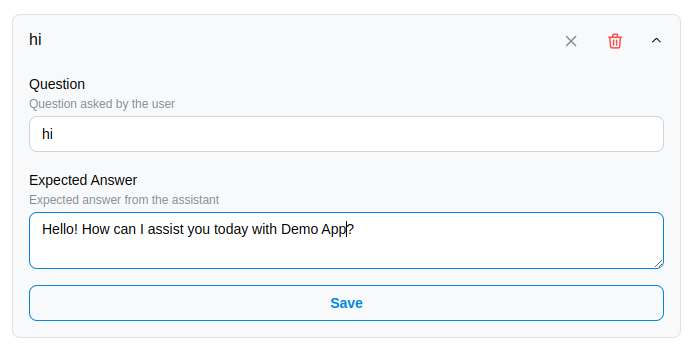

Edit a Test Case

-

Navigate to the Test Cases tab in the Evaluation section.

-

Locate the test case you want to edit.

-

Click on the Pencil icon on the top right of the test case.

-

Update the test case and click Save. A success notification will confirm that the test case was updated.

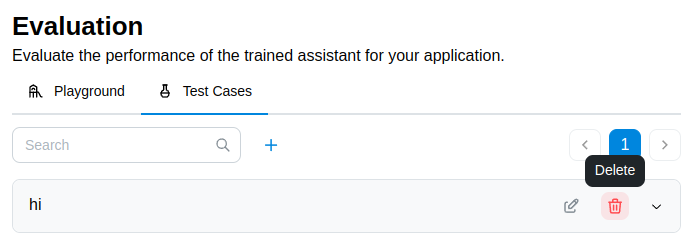

Delete a Test Case

-

Navigate to the Test Cases tab in the Evaluation section.

-

Locate the test case you want to delete.

-

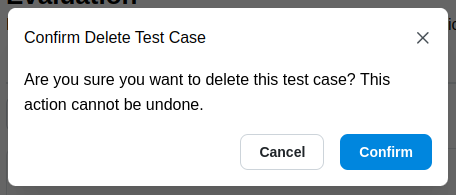

Click on the Trash icon on the top right of the test case.

-

Confirm the deletion in the modal that appears. A success notification will confirm that the test case was deleted.

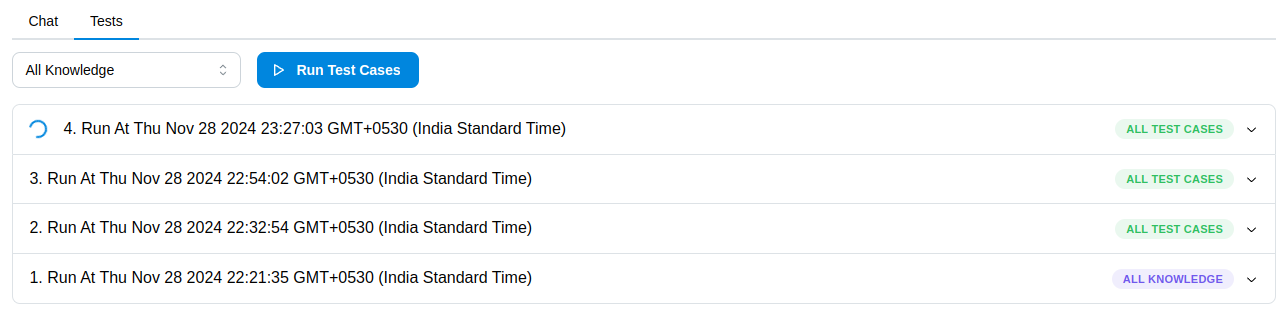

Running Test Cases

-

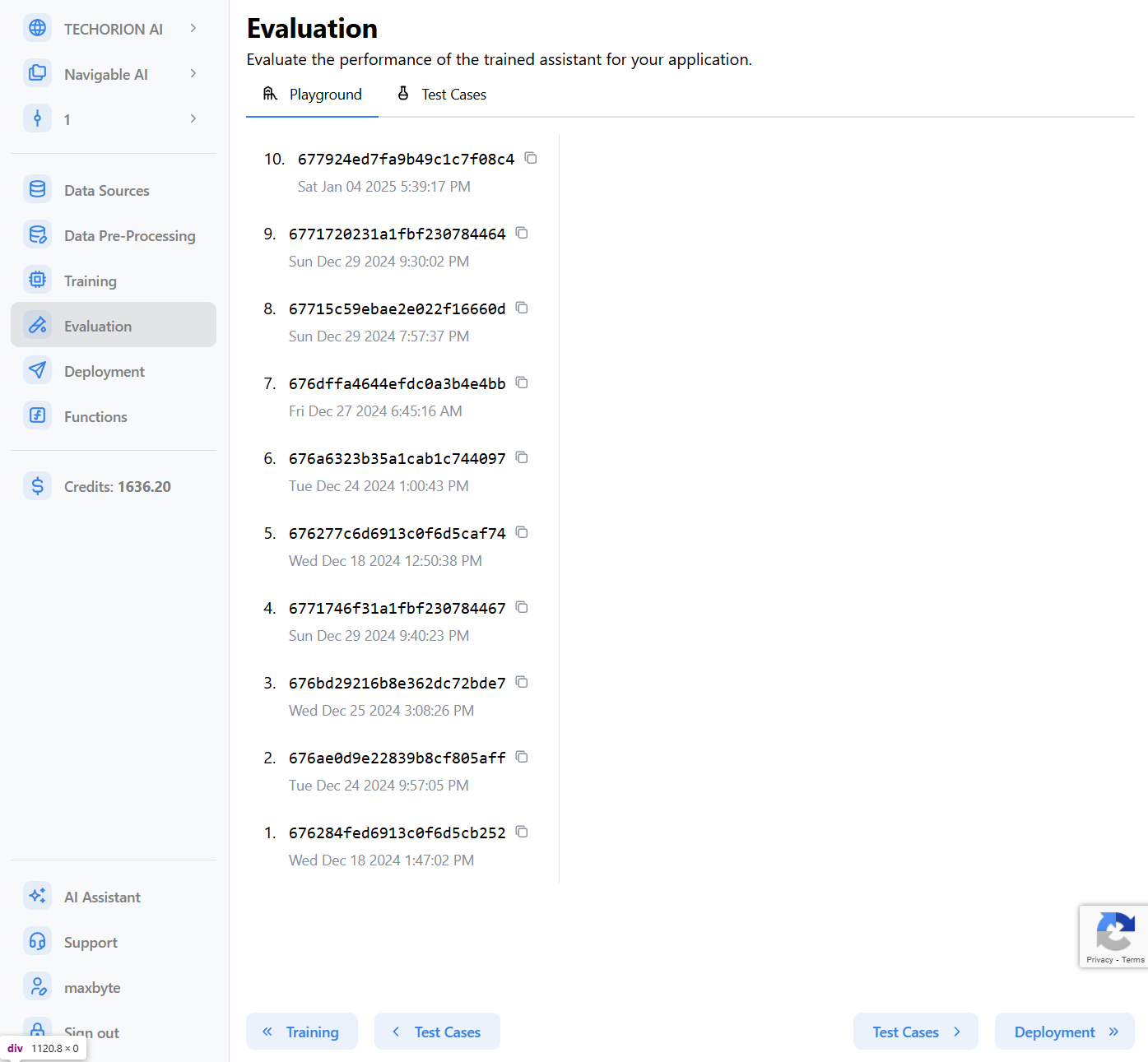

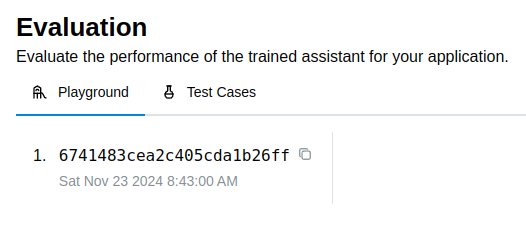

Navigate to the Playground tab in the Evaluation section.

-

Select the model you want to run the test cases on.

-

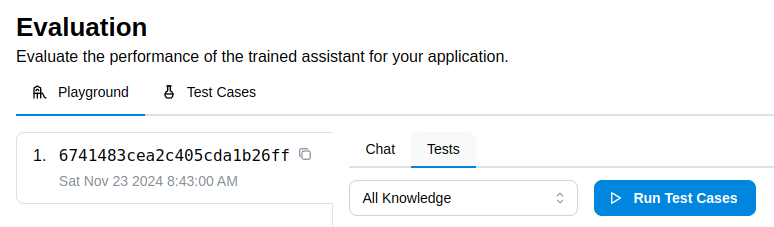

Navigate to the Tests tab.

-

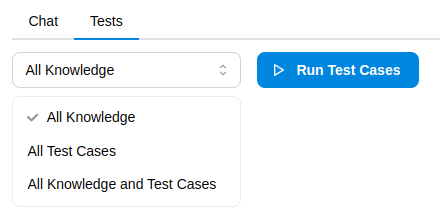

Choose the test set you want to run the test cases on.

-

Click the Run Test Cases button to run all test cases in current version.

-

The test cases will be executed, and the results will be displayed in the Test Cases tab. While the test cases are running, the test run will show a loading animation on the left.

Once the run completes, its results are displayed in the collapsed test run.

When evaluating with the All Knowledge test set, a total score above 85 is considered good.

You can also view each test case, its response, and its evaluation metrics. Click the View Test Case Responses button to see the individual test case results.

Test set options

- All Knowledge: Run test cases for all knowledge in the project.

- All Test Cases: Run all test cases in the current version.

- All Knowledge and Test Cases: Run test cases for all knowledge together with all test cases in the current version.
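As a rough illustration of which test cases each option covers, the sketch below models the three test set options as an enum and selects cases accordingly. The `TestSet` enum, the `select_test_cases` function, and the split into a "knowledge" group and a "current version" group are hypothetical, inferred from the option descriptions above rather than taken from the product.

```python
from enum import Enum
from typing import List, Sequence, TypeVar

T = TypeVar("T")


class TestSet(Enum):
    ALL_KNOWLEDGE = "All Knowledge"
    ALL_TEST_CASES = "All Test Cases"
    ALL_KNOWLEDGE_AND_TEST_CASES = "All Knowledge and Test Cases"


def select_test_cases(
    test_set: TestSet,
    knowledge_cases: Sequence[T],  # hypothetical: cases generated from project knowledge
    version_cases: Sequence[T],    # hypothetical: test cases in the current version
) -> List[T]:
    if test_set is TestSet.ALL_KNOWLEDGE:
        return list(knowledge_cases)
    if test_set is TestSet.ALL_TEST_CASES:
        return list(version_cases)
    # ALL_KNOWLEDGE_AND_TEST_CASES: both groups combined in one run.
    return list(knowledge_cases) + list(version_cases)


print(select_test_cases(TestSet.ALL_KNOWLEDGE_AND_TEST_CASES, ["kb-1", "kb-2"], ["tc-1"]))
# ['kb-1', 'kb-2', 'tc-1']
```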

Best Practices for Test Cases

- Comprehensive Coverage: Include test cases that address both common and edge-case scenarios.

- Realistic Queries: Use real-world examples to ensure the assistant is tested in practical situations.

- Iterative Testing: Regularly run and update test cases as you refine your assistant’s knowledge and training data.

Next Steps

While the test case evaluation is in progress, you can skip ahead to the Playground section to see the assistant's responses in action.